Forecasting is a crucial component of decision-making. To make good decisions in the present, we must be able to anticipate, with at least some accuracy, future events and the consequences of our actions.

Time series forecasting

Machine learning can be applied to time series data to help us forecast the future and make better decisions in the present. A time series is any set of values that have been measured at successive times and placed in chronological order. There is an enormous array of such data drawn from phenomena that affect us on a daily basis. Examples include weather data (such as wind, temperature, and humidity), solar activity, demand for electricity, and financial data (such as stock prices, exchange rates, and commodity prices).

The time-ordered structure of these datasets allows us to apply new tools when making predictions. For example, we can study the correlations between past and present values of a series in order to forecast the future. However, we must tread carefully, since it’s very easy to make mistakes here, such as training a forecasting model on data that won’t be available at the time the forecast is to be produced.

In this blog post, we discuss these challenges with reference to the Dow Jones Industrial Average (DJIA) and show how we deal with them using the evoML platform. Readers can apply the same fundamental concepts, and evoML, to other time series projects.

Data patterns change over time

The core assumption of supervised machine learning is that patterns learned from a set of training data can be applied to a new unseen set of data. Often this works very well: this assumption is the basis of science in general. We make observations, find a model to explain those observations and we use that model to predict future behaviour. For example, using Newton’s laws of motion and gravitation we can forecast the movements of planets quite accurately. Although we now have better models, this Newtonian model is as accurate today as it was in the 17th century.

But we cannot always take this stability for granted. Many machine learning models relate to patterns of human behaviour which can change over time, and this means that models trained on old data will not necessarily be valid in the future.

Forecasting the Dow Jones Industrial Average

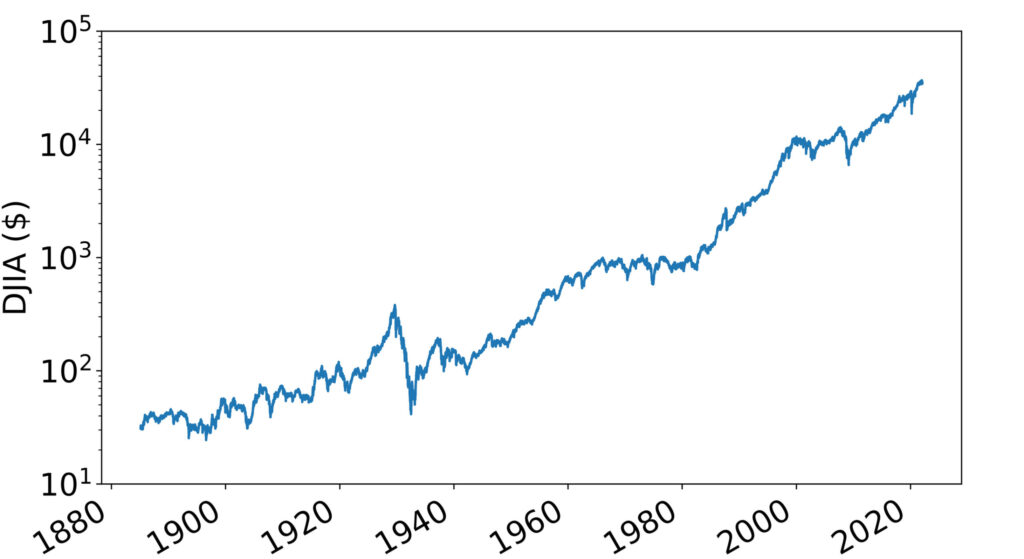

The Dow Jones is one of the oldest stock indices in the world, with a history dating back to 1885. It provides a price-weighted average of the stock prices of 30 large publicly traded American companies. We can retrieve the daily closing prices of the Dow Jones from TradingView.

Figure 1. Daily closing prices of the Dow Jones

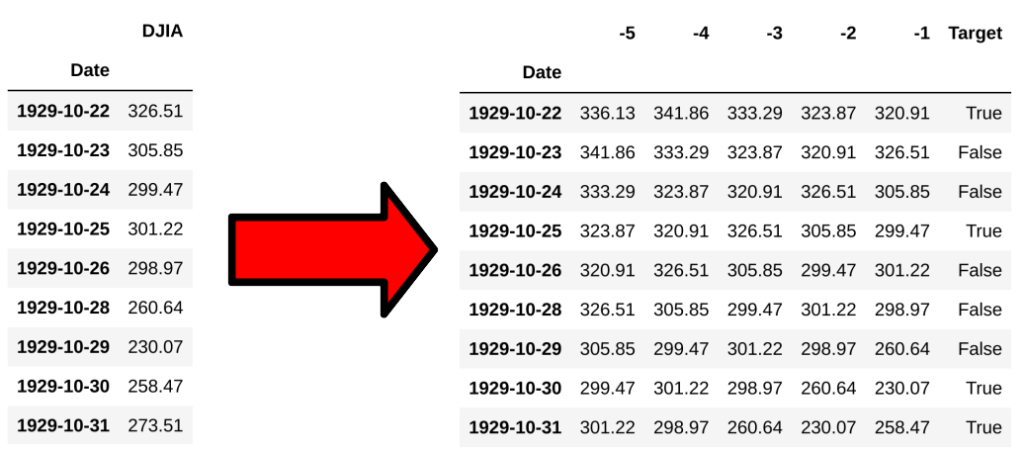

Now we ask a simple question: will tomorrow’s closing price be higher than today’s?

This reduces the forecasting problem to binary classification. In order to train a model we must generate the features and the target from our data. To predict the trend in closing prices we will use the previous five days of closing prices (not including weekends and holidays when trading is halted).
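The feature and target construction described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the evoML implementation: the function name `make_dataset` and the toy prices are hypothetical, and we assume that the five-day window ends with today's close.

```python
from typing import List, Tuple


def make_dataset(closes: List[float], n_lags: int = 5) -> Tuple[List[List[float]], List[int]]:
    """Build (features, targets) from chronologically ordered closing prices.

    Assumption: the features for day t are the n_lags most recent closes,
    ending with the close of day t itself. The target is 1 if day t+1
    closes higher than day t, and 0 otherwise.
    """
    features, targets = [], []
    # Start once n_lags closes are available; stop one day before the end
    # so that "tomorrow's" close exists for the label.
    for t in range(n_lags - 1, len(closes) - 1):
        features.append(closes[t - n_lags + 1 : t + 1])
        targets.append(1 if closes[t + 1] > closes[t] else 0)
    return features, targets


# Toy prices for illustration only, not real Dow Jones data.
closes = [100.0, 101.0, 100.5, 102.0, 103.0, 102.5, 104.0]
X, y = make_dataset(closes)
```

Because the input contains trading days only, weekends and holidays are skipped automatically. Each row of `X` contains only prices known at the close of day t, so no future information enters the features.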

Before we train a classifier to predict the trend, we must be careful about how to measure the accuracy of our model.

Figure 2. Previous five days of closing prices

Why K-fold cross validation doesn’t work

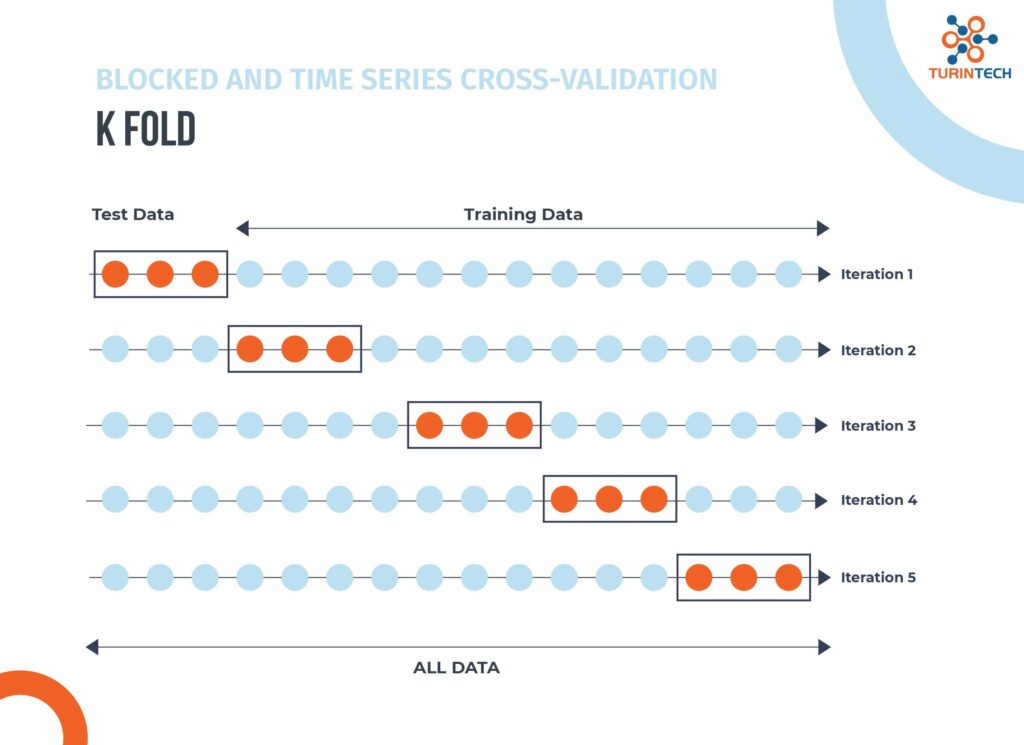

One of the most popular techniques for this task is k-fold cross validation. In this procedure, the training data is first divided into k separate blocks. We then run k rounds of training and validation. In each round, one of the blocks is set aside, the model is trained on the remaining data, and its performance is evaluated on the block we excluded. Evaluation metrics such as classification accuracy can be averaged over the k rounds to give an overall measure of the performance of the model.

Figure 3. K-fold cross validation.

However, when we consider time series data this procedure must be modified. Every time we evaluate a model we must be sure that the procedure we are using reflects how the model will be used in practice. Clearly, if we are training a model to tell us the future, we will only be able to train it on data we have collected before the forecast is made. Yet if we use k-fold cross validation then some of our validation data will precede the corresponding training data.

Validating a model in this way could give an inaccurate picture of its performance, because it allows the model to use information from the future to predict the past behaviour of the time series. This kind of bias is especially problematic if the factors governing the dynamics of the time series (for example, interest rates and exchange rates) change over time.
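To make the problem concrete, the sketch below builds unshuffled k-fold blocks by hand and checks, for each round, whether any training index comes later than the earliest validation index. The helper `k_fold_blocks` is a hypothetical name used only for this illustration, and sample indices stand in for chronological order.

```python
from typing import Iterator, List, Tuple


def k_fold_blocks(n_samples: int, k: int) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_idx, val_idx) for contiguous, unshuffled k-fold blocks."""
    fold = n_samples // k
    for i in range(k):
        start = i * fold
        # The last block absorbs any remainder.
        stop = n_samples if i == k - 1 else start + fold
        val = list(range(start, stop))
        train = [j for j in range(n_samples) if j < start or j >= stop]
        yield train, val


# A round "looks ahead" if it trains on data that comes after
# the start of its validation block.
leaky_rounds = [
    i for i, (train, val) in enumerate(k_fold_blocks(10, 5))
    if max(train) > min(val)
]
```

With 10 samples and 5 folds, every round except the last trains on data that comes after its validation block.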

Chronologically ordered training and validation

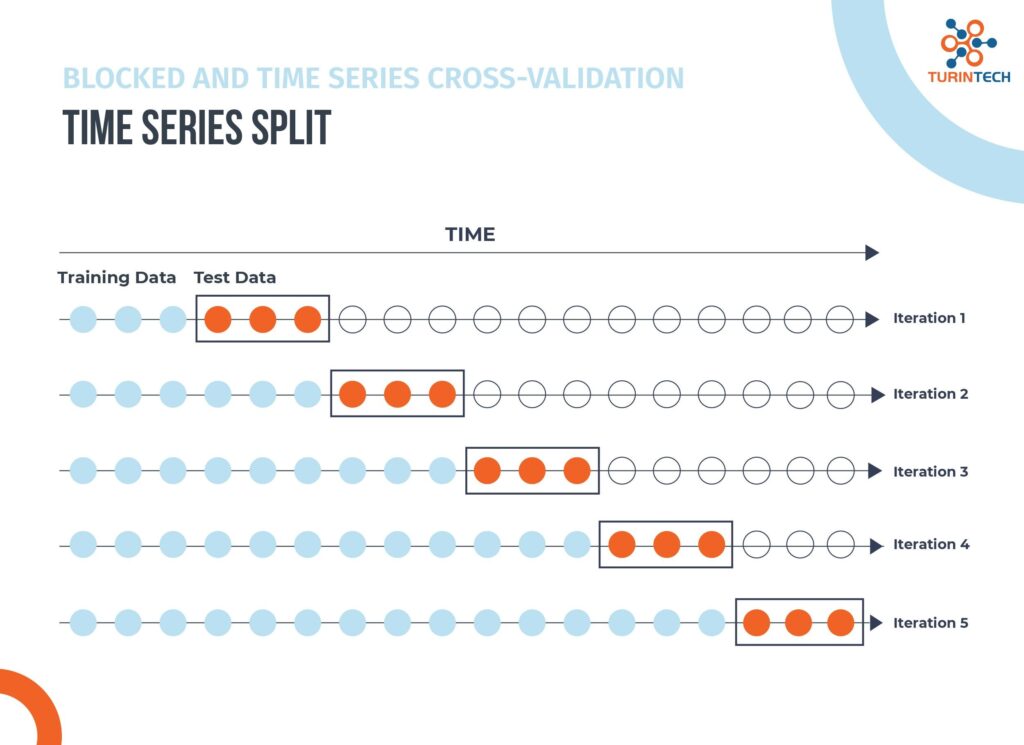

The alternative we apply is to use chronologically ordered training and validation blocks. As in k-fold cross validation we divide the data into k blocks, but we now go through k-1 rounds of training and validation. We start by training on the first block and validating on the second. In each subsequent round we expand the training set by adding the previous validation block to the training set and validating on the next block until we have covered the entire data set. This allows us to validate our model over many different windows in time while always training it on past data.
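The expanding-window procedure described above can be sketched as follows. This is a plain-Python illustration under our own naming (`expanding_window_splits` is hypothetical), not the code evoML runs internally.

```python
from typing import Iterator, List, Tuple


def expanding_window_splits(n_samples: int, k: int) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield k-1 (train_idx, val_idx) pairs over k chronological blocks.

    Round i trains on blocks 1..i and validates on block i+1, so the
    training data always precedes the validation data.
    """
    block = n_samples // k
    for i in range(1, k):
        train_end = i * block
        # The last validation block absorbs any remainder.
        val_end = n_samples if i == k - 1 else train_end + block
        yield list(range(train_end)), list(range(train_end, val_end))


splits = list(expanding_window_splits(10, 5))
for train, val in splits:
    print(f"train on 0..{train[-1]}, validate on {val[0]}..{val[-1]}")
```

For readers working in scikit-learn, `TimeSeriesSplit` in `sklearn.model_selection` follows the same expanding-window idea, with `n_splits` playing the role of k-1.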

Figure 4. Time Series Split.

Parallelise ML Model Training to Find the Best Ones

Using the evoML platform, we can quickly create a trial to compare and optimise hundreds of models simultaneously.

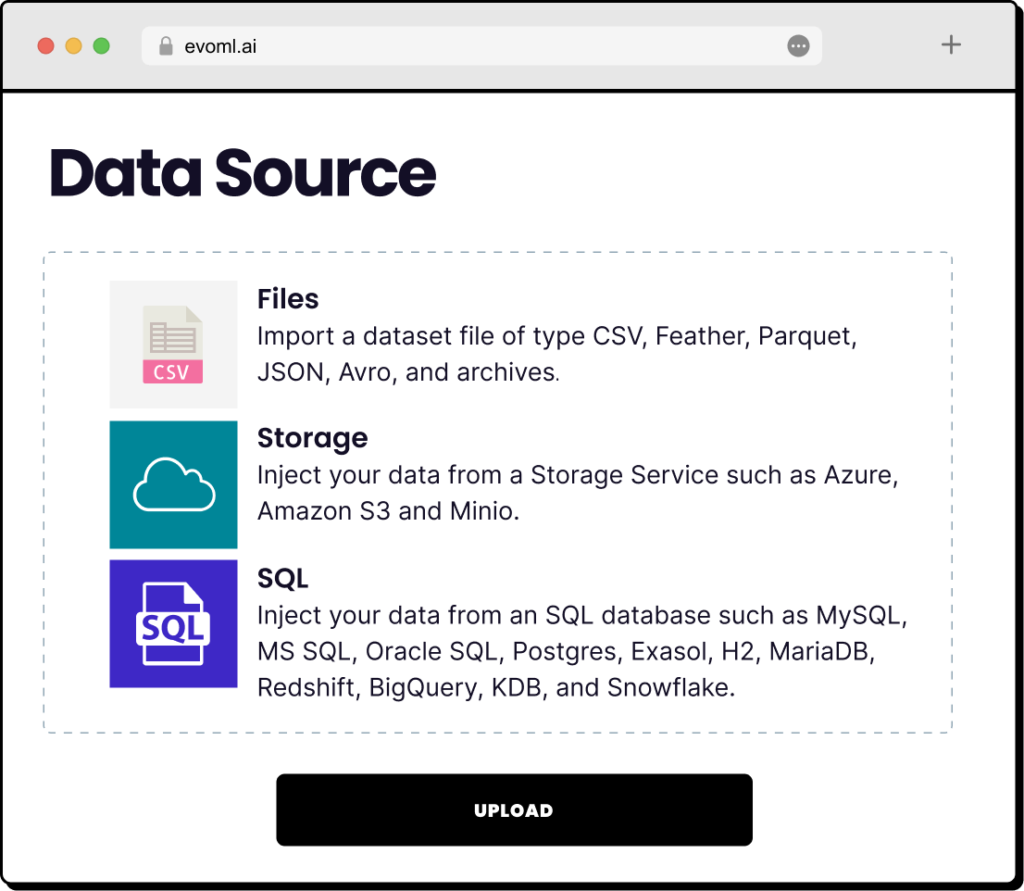

We can simply drag and drop the Dow Jones data into the code-free interface of the evoML platform.

Figure 5. Import your data from anywhere

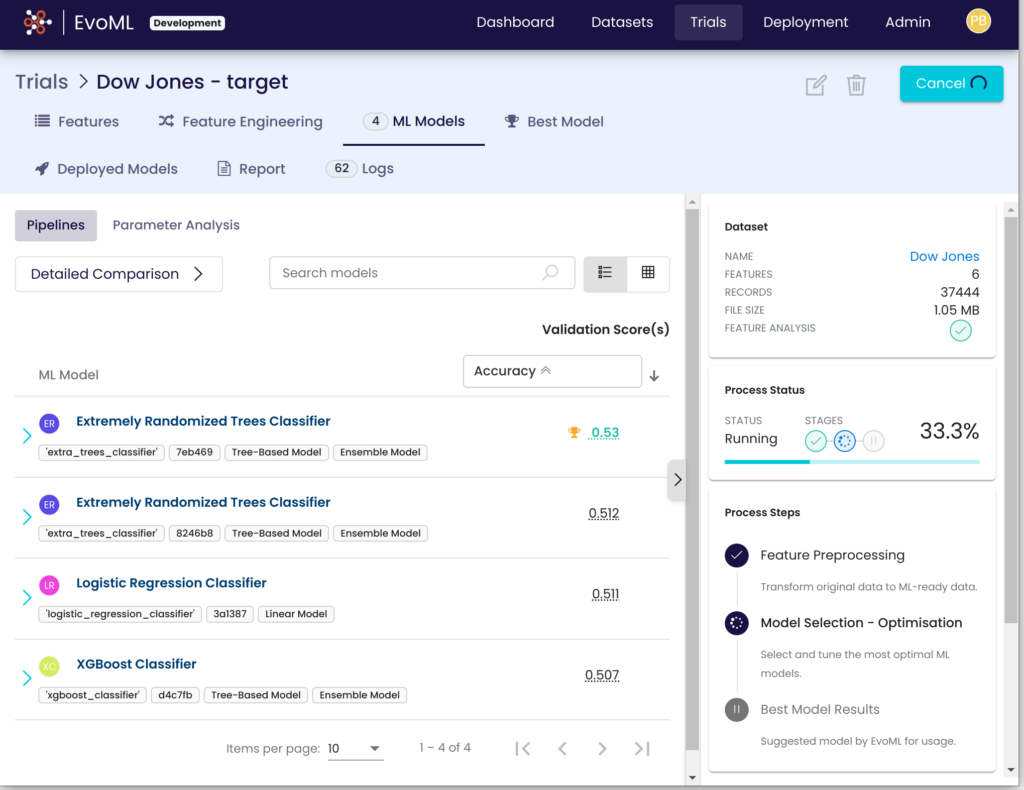

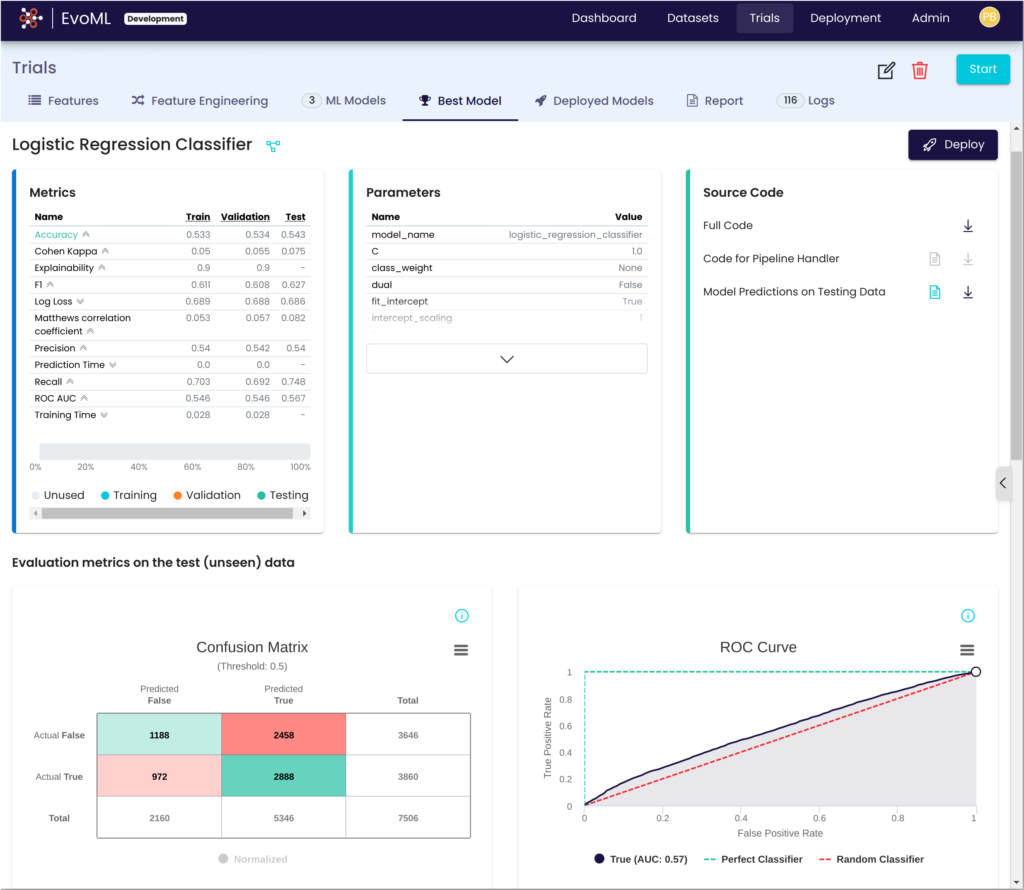

We first perform cross validation using data from 1885 to 1950. We then test on data from 1950 to 1980. After comparing the LightGBM, random forest, and logistic regression classifiers, we find that logistic regression performs best, with an accuracy of 0.539 during model validation.

Figure 6. Model generation and evaluation on evoML

Deploy the model and forecast

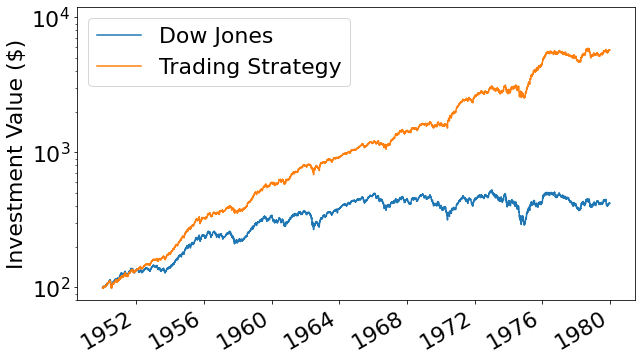

We devise a simple trading strategy. We start with $100. If we predict that the Dow Jones index will increase at the next time step, then we will either buy or hold (depending on whether or not our money was already invested). But if we predict a fall then we sell our stock (or do nothing if we are not invested). We test how this strategy would have performed between 1950 and 1980.
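A rough sketch of this strategy as a backtest is shown below. Several details are our own simplifying assumptions rather than facts from the experiment: we trade at the day's closing price, move the entire balance in or out at once, allow fractional shares, and ignore transaction costs. The function name `backtest` and the toy prices are hypothetical.

```python
from typing import List


def backtest(closes: List[float], predictions: List[int], initial_cash: float = 100.0) -> float:
    """Return the final portfolio value of the buy/hold/sell strategy.

    predictions[t] is 1 if the model expects closes[t + 1] > closes[t].
    Assumptions: trades execute at closes[t], all-in or all-out,
    fractional shares allowed, no transaction costs.
    """
    cash, shares = initial_cash, 0.0
    for t, predicted_up in enumerate(predictions):
        price = closes[t]
        if predicted_up and shares == 0.0:
            shares, cash = cash / price, 0.0    # buy with all available cash
        elif not predicted_up and shares > 0.0:
            cash, shares = shares * price, 0.0  # sell the whole position
        # Otherwise hold the current position (or stay out of the market).
    # Value any open position at the final close.
    return cash + shares * closes[-1]


# Toy example with perfect predictions, for illustration only.
closes = [100.0, 110.0, 100.0, 120.0]
predictions = [1, 0, 1]
strategy_value = backtest(closes, predictions)
buy_and_hold_value = 100.0 * closes[-1] / closes[0]
```

In this toy example, the strategy avoids the dip from 110 to 100 and finishes ahead of buy-and-hold. Real results depend heavily on prediction accuracy and on costs that this sketch leaves out.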

Figure 7. Trading strategy performance between 1950 and 1980

If we had simply bought shares in the constituent companies of the Dow Jones, our $100 investment would have risen in value to $419 by 1980. Using our strategy, however, we could have increased the value of our investment to $5,708, beating the market by a factor of 13.6.

Optimise Model Constantly for Peak Performance

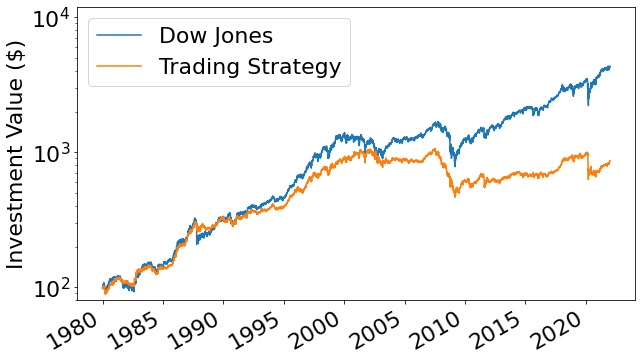

This basic strategy would have performed amazingly well. But how does it perform in the modern day? Unfortunately, not so well. If we repeat the same exercise, but we cover the period from 1980 till the start of 2022, we find that our initial $100 would have increased to $864 if we had followed our trading strategy, but would have increased to $4,333 if we had simply followed the market.
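To compare the two periods on the same footing, we can express the strategy's final value as a multiple of the market's final value. The short calculation below uses only the dollar figures quoted in this post; the helper name `relative_to_market` is hypothetical.

```python
def relative_to_market(strategy_value: float, market_value: float) -> float:
    """Final strategy value as a multiple of the buy-and-hold value."""
    return strategy_value / market_value


early = relative_to_market(5708, 419)  # 1950 to 1980
late = relative_to_market(864, 4333)   # 1980 onwards

print(f"1950 to 1980: {early:.1f}x the market")
print(f"1980 onwards: {late:.2f}x the market")
```

The strategy goes from roughly 13.6 times the market's final value in the earlier period to roughly one fifth of it in the later one.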

Figure 8. Trading strategy performance between 1980 and 2020

So our previous strategy is now performing very poorly. Why is this? It is well known that forecasting the stock market is a very challenging task. By studying it carefully we may be able to learn certain correlations between past and present behaviour, but there is no guarantee that these correlations will continue to hold in the future.

The laws governing the stock market are the product of human behaviour and can change over time. For example, the stock market is heavily influenced by our efforts to forecast it in a way which clearly doesn’t apply to other systems such as the motion of planets or the weather. If we believe a stock is going to rise in price then we may choose to buy it, but other traders may also be in the process of buying that stock because they have reached the same conclusion. The effect of all these buy orders is to increase the price of the stock and prevent us from buying it at the low price we intended. Every trader is in competition with every other trader, and advances in information technology and mathematics make older trading strategies obsolete. Therefore, it is not possible to simply choose a strategy and stick to it. Models must be constantly monitored and updated in order to ensure future success.

This is where evoML can help. By automatically optimising over dozens of potential models and hundreds of their parameters, we enable users to quickly adapt and trial new approaches based on their custom criteria (for example, accuracy, explainability, and prediction time). The best results can then be easily deployed along with their full source code so the user not only receives the best model for the job, but understands what that model is and how it works.

Figure 9. Model explanation with full code on evoML

We hope this article helps you better understand time series forecasting and apply these concepts in your own time series projects. If you are interested in trading and machine learning and want to learn more, check out our research paper “Cryptocurrency trading: a comprehensive survey”.

About the Author

Dr Paul Brookes | TurinTech Research

Data scientist and physicist.